AUTHOR Prapphal, Kanchana

TITLE The Relevance of Language Testing Research in the Planning of Language Programmes.

PUB DATE 90

NOTE 13p.

PUB TYPE Information Analyses (070) -- Reports - Evaluative/Feasibility (142)

EDRS PRICE MF01/PC01 Plus Postage.

DESCRIPTORS Academic Achievement; *English for Academic Purposes; Foreign Countries; Higher Education; Language Research; *Language Tests; Learning Theories; *Predictive Validity; *Second Language Learning; *Second Language Programs; Testing

IDENTIFIERS *Thailand

ABSTRACT

Three research studies conducted at Thai universities involving language testing and courses in General English and English for Academic Purposes (EAP) are discussed. The first study examined the predictive validity of different types of language tests on academic achievement in General English and EAP courses. It was found that the test format, as shown in the Matching Cloze Test, may be significant in predicting future academic achievement, and the content of language tests may play a role in academic achievement for each type of language program. The second study showed the direct and indirect relationships between subskills of General English and EAP tests. It was found that all language subskills, regardless of content, are significantly related. The third study examined the underlying relationships between General English and EAP tests. It was found that EAP tests may predict achievement in EAP programs better than General English tests; the formats associated with each discipline tend to predict academic success in science better than those that are not related to a specific discipline; and there is a common factor shared by the EAP tests, General English tests, and knowledge of the subject matter represented by student grade point average. (GLR)


The Relevance of Language Testing Research in the Planning of Language Programmes

Kanchana Prapphal

Chulalongkorn University Language Institute

Bangkok, Thailand

Abstract

This paper reviews three research studies in language testing, conducted at Thai universities, that deal with General English and English for Academic Purposes. The first study examines the predictive validity of different types of language tests for academic achievement in General English and English for Academic Purposes courses. The second study shows the direct and indirect relationships between subskills of General English and English for Academic Purposes tests. The third study examines the underlying relationships between General English and English for Academic Purposes tests.

The findings of these three studies provide insights for those involved in the planning of language programmes. They help answer questions such as “How great a role do the content and format of a test play in predicting future language achievement?” and “To what extent are the subskills of General English related to those of English for Academic Purposes?”

Introduction

Language testing plays an important role in the teaching-learning process. It helps language teachers to place students at appropriate levels, to diagnose students’ strengths and weaknesses, and to evaluate their performance during and at the end of a course. More importantly, language testing can aid in the planning and administration of language programmes. This seems to be the key issue, because the success or failure of any language programme depends on planning.

One major problem facing those involved in the planning and administration of language programmes is whether to start with General English (GE) or with English for Specific Purposes (ESP). Some studies have been conducted in the English for Academic Purposes (EAP) context, but the question concerning GE and EAP cannot be answered conclusively. Moy (1975) obtained a significant interaction between major-field area and text content, but the effect was not exactly as predicted. He concluded that the study failed to support the hypothesis that students would do better on materials concerning their own academic fields. Peretz (1986) studied students in science and technology, biology and humanities, and social sciences, and found a significant interaction between major-field area and text content as hypothesized, but science and technology students outperformed all the other groups. She concluded that it was not always the case that students would perform better if the content of the reading passage was related to their field of study.

Conversely, other studies provide tentative evidence in favor of the hypothesized interaction between students’ major fields and text content. Alderson and Urquhart (1985) reported on three studies and found that academic background can play an important role in test performance. They concluded that “when these students were familiar with the content area, they were able to answer direct and overview questions with equal ease; when this familiarity with the content area was lacking, they could still answer direct questions, but their ability to answer overview questions was greatly reduced.” (p. 203)

In the Thai context, some studies have attempted to investigate the relationships between GE and EAP. This paper reviews three research studies on this topic and presents empirical evidence that may be useful to planners of language programmes at the university level.

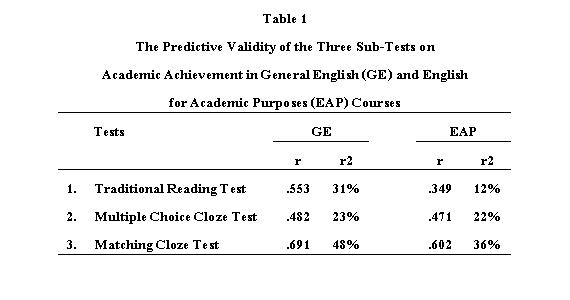

Study One¹

This study involved 264 randomly selected science students who had taken the national English entrance examination in Thailand in 1982. A multiple-choice cloze test (MCC) and a matching cloze test (MC) were compared with a traditional reading comprehension test (TR). The two cloze tests represent global knowledge of English and cognitive processing abilities. The TR test represents a less synthesized knowledge of linguistic elements. The reliability coefficients (Cronbach’s alpha) of the MCC, MC, and TR subtests were .908, .640, and .659 respectively. The reliability coefficient of the combined tests was .922. One hundred and thirty-nine of these subjects enrolled at Chulalongkorn University. There they were required to take the freshman General English course offered by the Chulalongkorn University Language Institute. The remaining 125 enrolled at Mahidol University and had to take the English for Academic Purposes course offered by the university's English Department. Table 1 presents the predictive validity of the three subtests for academic achievement in the General English and English for Academic Purposes courses.
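Cronbach’s alpha is the reliability index reported above. It compares the sum of the item variances with the variance of the total score:

α = k/(k − 1) × (1 − Σ item variances / total-score variance)

Here k is the number of items. The Python sketch below computes alpha for a score matrix with one row per respondent and one column per item. The function name and the small score matrix are hypothetical illustrations, not code or data from the study.

```python
from statistics import pvariance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a score matrix with one row per respondent
    and one column per item (hypothetical helper for illustration)."""
    k = len(scores[0])  # number of items
    if k < 2:
        raise ValueError("alpha requires at least two items")
    # Variance of each item's scores across respondents.
    item_variances = [pvariance([row[i] for row in scores]) for i in range(k)]
    # Variance of each respondent's total score.
    total_variance = pvariance([sum(row) for row in scores])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical right/wrong scores: four respondents, three items.
print(cronbach_alpha([[1, 1, 1], [1, 1, 0], [1, 0, 0], [0, 0, 0]]))  # prints 0.75
```

Alpha rises when items vary together, so that the total-score variance is large relative to the summed item variances. This is why a set of items that measure the same ability yields a high coefficient.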

The Pearson product-moment correlation coefficients in Table 1 indicate that all three tests correlated significantly and substantially with university English achievement. However, the Matching Cloze Test correlated more highly with university achievement than the other two tests did, at both Chulalongkorn and Mahidol. All three tests had general English content. Accordingly, they accounted for more of the variance in achievement in the General English course than in the English for Academic Purposes course.

This study provides the following information:

1. Test format may be significant in predicting future academic achievement. A format which aims at measuring a student’s ability to integrate linguistic elements at the syntactic, semantic, and discourse levels and the student’s ability to anticipate such elements as shown by the Matching Cloze Test may predict future academic success better than a test format which does not tap students’ underlying propositional abilities.

2. The content of language tests may play a role in academic achievement for each type of language program. The three general English tests correlated more highly with the General English Course than with the English for Academic Purposes Course.

Study Two²

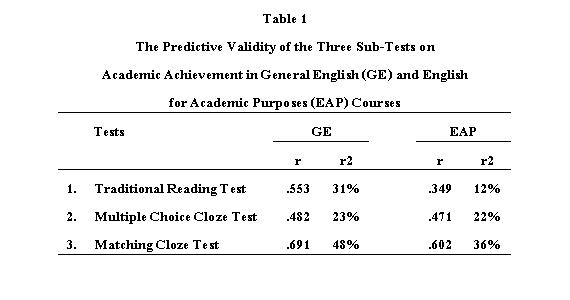

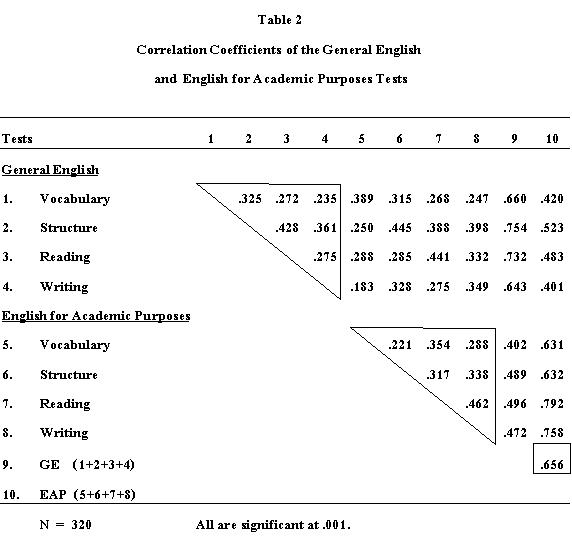

Three hundred and twenty Chulalongkorn University students participated in this study: 160 first-year students and 160 second-year students. In each group, 80 students were majoring in science. The first-year students studied Foundation English (FE), which focuses on general English. The second-year students studied English for Academic Purposes (EAP), which emphasizes content related to each discipline.

There were two tests. The first, the General English Test (GE), aimed to assess the students’ ability to understand general English. It comprised four parts:

- Vocabulary (15 discrete-point multiple-choice items)
- Structure (15 discrete-point multiple-choice items)
- Reading (15 matching cloze items)
- Writing (15 multiple-choice cloze items)

The English for Academic Purposes Test (EAP) likewise comprised four parts and had the same format as the General English Test. However, its content was more discipline-specific. All subjects took both tests. The reliability coefficients (Cronbach’s alpha) of the GE and EAP tests were .762 and .776 respectively. Table 2 presents the Pearson correlation coefficients for the GE and EAP tests.

In Table 2, the upper triangle shows the correlations among the subskills of the General English Test. The lower triangle shows the correlations among the subskills of the English for Academic Purposes Test. The correlation coefficients range from .221 to .462, and the correlation between the two tests is .656. All correlation coefficients are significant at the .001 level.

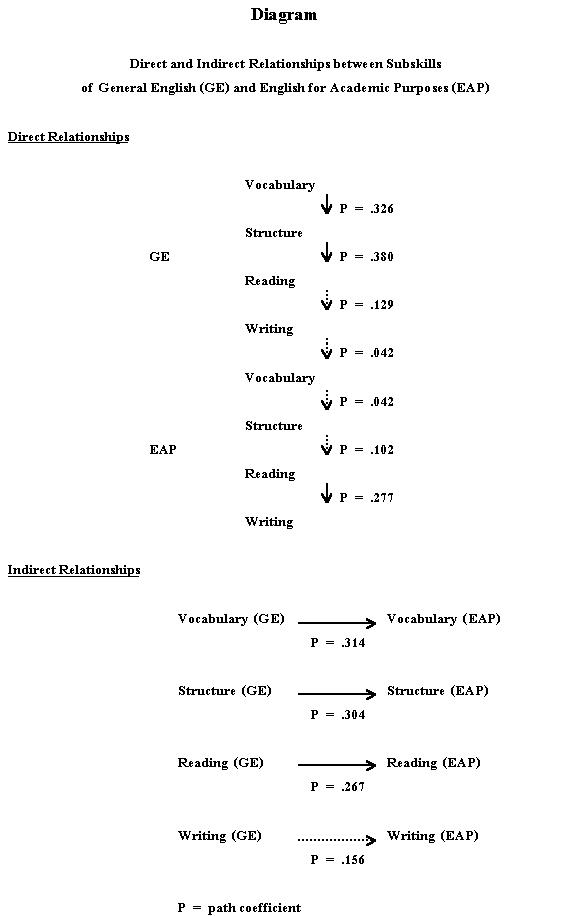

To investigate the direct and indirect relationships among subskills of the GE and EAP tests, path analyses were conducted. The accompanying path diagram illustrates the underlying relationships among the subskills of the two tests.

Within the General English Test, there are significant direct relationships among all subskills except between reading and writing. Within the English for Academic Purposes Test, the reverse holds: there is no direct relationship among the subskills except between reading and writing. The strongest direct relationship is between the structure and reading subskills, with a path coefficient of .380. There are significant indirect relationships between the subskills of General English and those of English for Academic Purposes. The exception is the writing subskill: there is no such indirect relationship between the writing subskills of the two tests.
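In path analysis, an indirect relationship is the part of an association that runs through an intermediate variable. Its size is the product of the path coefficients along that route. The Python sketch below illustrates this with the simplest recursive model: A → B → C plus a direct path A → C. It computes standardized path coefficients from the three correlations among A, B, and C. This is an illustration under an assumed three-variable model. The study's own path models involve more subskills, and the function name and input correlations are hypothetical.

```python
def three_variable_paths(r_ab: float, r_ac: float, r_bc: float) -> dict[str, float]:
    """Decompose the A-C correlation in the assumed recursive model
    A -> B -> C with a direct path A -> C (hypothetical helper).
    Inputs are correlations; outputs are standardized path coefficients."""
    denom = 1 - r_ab ** 2
    if denom == 0:
        raise ValueError("A and B must not be perfectly correlated")
    p_ba = r_ab                          # A -> B (simple regression of B on A)
    p_ca = (r_ac - r_bc * r_ab) / denom  # direct A -> C, controlling for B
    p_cb = (r_bc - r_ac * r_ab) / denom  # B -> C, controlling for A
    indirect = p_ba * p_cb               # A -> B -> C: product of the paths
    return {"direct": p_ca, "indirect": indirect, "total": p_ca + indirect}


# Hypothetical correlations among three subskill scores.
paths = three_variable_paths(r_ab=0.5, r_ac=0.4, r_bc=0.6)
```

In this model the direct and indirect effects always add up to the original correlation between A and C. That identity gives a useful check on a hand-computed decomposition.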

Study Two suggests the following insights:

1. All language subskills, regardless of content, are significantly related. Therefore, there might be a transfer of subskills from one content to another.

2. The nature of the transfer of language subskills may be direct or indirect. However, a hierarchical relationship from General English to English for Academic Purposes is not confirmed by this study.

3. The transfer of language subskills across content may occur within each subskill. Students who have mastered vocabulary, structure, and reading in General English may transfer these subskills to English for Academic Purposes. However, this observation does not apply to the writing subskill.

Study Three³

One hundred first-year students from the Faculty of Science at Mahidol University took part in the study. The investigator used three subtests:

- Sentence-Fill-In (18 items)
- Writing a Summary in the Native Language (20 important points)
- Writing Based on a Diagram (15 items)

The first two subtests assessed reading comprehension, and the third measured writing ability. Sentence-Fill-In was a matching cloze in which the subjects had to choose the correct sentence from a given list. Writing a Summary required the subjects to translate the ideas of a given passage accurately. Writing Based on a Diagram asked the subjects to write complete sentences based on three given diagrams. The content of all three subtests was taken from scientific texts. The reliability coefficients (KR-20) of the three subtests were .799, .770, and .793 respectively.
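KR-20 is the Kuder-Richardson reliability formula for items scored right or wrong. The terms are defined as follows:

- k is the number of items.
- p_i is the proportion of examinees answering item i correctly, and q_i = 1 − p_i.
- σ²_X is the variance of the total scores.

The formula is:

```latex
\mathrm{KR}_{20} = \frac{k}{k-1}\left(1 - \frac{\sum_{i=1}^{k} p_i q_i}{\sigma_X^2}\right)
```

For items scored 0 or 1, each item's variance is p_i q_i. KR-20 therefore gives the same value as Cronbach’s alpha computed on the same data.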

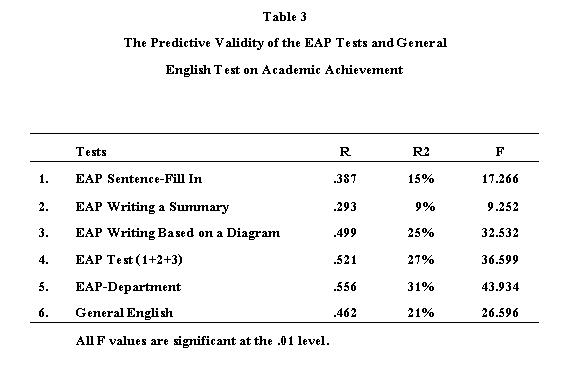

Table 3 presents regression analyses that used three predictors of academic achievement, as represented by GPA:

- the EAP subtests
- the EAP Department Test
- the University English AB Entrance Examination, which assessed the general English proficiency of Thai students

All the tests predicted the subjects’ academic achievement, but the EAP tests were more effective than the General English Test. The EAP Test explained 27% of the variance and the EAP Department Test 31%. The General English Test explained only 21%. As for test format, the EAP subtest Writing Based on a Diagram had higher predictive validity than the other subtests. It explained 25% of the total variance, compared with 15% for Sentence-Fill-In and 9% for Writing a Summary.
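The three subtest figures (25%, 15%, and 9%) sum to 49%. Such percentages cannot simply be added when the predictors are correlated, because correlated predictors share part of the variance they explain. For two predictors, the combined R² follows from three correlations: each predictor's correlation with the outcome, and the correlation between the predictors. The Python sketch below applies that standard formula. The function name and the correlations used are hypothetical, not values from Table 3.

```python
def r_squared_two_predictors(r_y1: float, r_y2: float, r_12: float) -> float:
    """R squared for a least-squares regression of y on two predictors,
    computed from r_y1 and r_y2 (each predictor with y) and r_12 (between
    the predictors). Hypothetical helper for illustration."""
    if abs(r_12) >= 1:
        raise ValueError("predictors must not be perfectly correlated")
    return (r_y1 ** 2 + r_y2 ** 2 - 2 * r_y1 * r_y2 * r_12) / (1 - r_12 ** 2)


# Hypothetical correlations, not values from Table 3.
separate = 0.4 ** 2 + 0.6 ** 2  # 0.52 if the two R^2 values were simply added
combined = r_squared_two_predictors(0.4, 0.6, 0.5)  # about 0.373
```

Consider two predictors correlated at .5 with each other. Separately they explain 16% and 36% of the outcome's variance, but together they explain only about 37%, not 52%. Only when the predictors are uncorrelated (r_12 = 0) does the combined R² equal the sum of the separate values.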

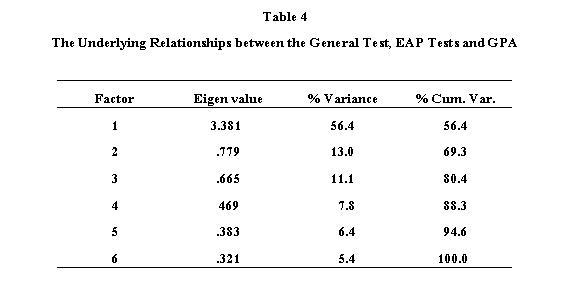

A principal factor analysis specifying six factors was performed to investigate the underlying relationships among the General English Test, the EAP tests, and GPA. Table 4 presents the results.

The first factor, which was common to all variables, accounted for 56.4% of the total variance. There were also specific factors associated with each variable. The remaining five factors explained about 44% of the total variance.
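The share of total variance attributed to a factor is its eigenvalue divided by the number of standardized variables, because each standardized variable contributes a variance of 1. As a simplification, the Python sketch below computes this share for the first principal component of a correlation matrix, using power iteration. Principal factor analysis, the method used in the study, instead places communality estimates on the diagonal of the matrix, so its figures will differ. The function names and the example matrix are hypothetical.

```python
import math


def largest_eigenvalue(matrix: list[list[float]], iterations: int = 500) -> float:
    """Largest eigenvalue of a symmetric positive semidefinite matrix,
    found by power iteration (hypothetical helper)."""
    n = len(matrix)
    # Positive starting vector; assumed not orthogonal to the leading
    # eigenvector, which holds when all correlations are positive.
    v = [1.0 / (i + 1) for i in range(n)]
    for _ in range(iterations):
        w = [sum(matrix[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient v^T A v for the unit vector v.
    av = [sum(matrix[i][j] * v[j] for j in range(n)) for i in range(n)]
    return sum(v[i] * av[i] for i in range(n))


def first_component_share(corr: list[list[float]]) -> float:
    """Proportion of total standardized variance carried by the first
    principal component: its eigenvalue divided by the number of variables,
    which equals the trace of a correlation matrix."""
    return largest_eigenvalue(corr) / len(corr)


# Hypothetical correlation matrix for two variables correlated at .6.
share = first_component_share([[1.0, 0.6], [0.6, 1.0]])
```

For two variables correlated at .6, for example, the first component carries (1 + .6)/2 = 80% of the total variance. The components that follow share whatever variance is left.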

The third study suggests the following:

1. EAP tests may predict achievement in EAP programs better than general English tests.

2. Formats associated with each discipline, such as “Writing Based on a Diagram,” tend to predict academic success in science better than formats that are not related to the specific discipline.

3. There is a common factor shared by the EAP tests, the General English Test, and knowledge of the subject matter as represented by GPA. However, tests of different content involve specific factors as well. This conclusion agrees with the “Partial Divisibility Hypothesis” proposed by Oller (1979). It is also consistent with empirical evidence presented by Bachman and Palmer (1983) and other researchers.

Conclusions

The findings of these three studies can be applied in the planning of language programmes in Thailand, especially at the university level.

1. Content. Many programme planners are uncertain whether to start their language programmes with general English or with English for specific purposes. The insights from these three studies may help answer the question “What should we teach?” It seems that tests whose content matches the students’ discipline may predict academic achievement better than tests of general content. However, programme designers need additional information: they have to assess the needs of their own students. A survey of performance objectives for learning English for communication conducted by Prapphal (1987) may provide broad guidelines for this purpose.

2. Test Format. The results of these three studies suggest that certain test formats may predict future academic and language achievement better than others. Formats that measure students’ ability to integrate linguistic elements at the discourse level may predict future academic and language success better than formats that do not tap students’ underlying propositional abilities. This observation tends to support the premise on which communicative language teaching is based: communicative tasks and tests tend to integrate linguistic elements rather than separate them. A further consideration is that specific formats may measure particular subskills. For example, “Writing Based on a Diagram” may predict performance in writing for science better than performance in writing for other disciplines.

3. Transfer of Skills. There may be a transfer of some language skills across content. Students appear to transfer vocabulary, structure, and reading skills in General English to those in English for Academic Purposes. However, students seem to lack the ability to transfer writing skills from General English to English for Academic Purposes. Programme planners may have to emphasize writing skills more than other skills in EAP courses.

These are some insights derived from three studies in language testing research in the context of English teaching at the university level in Thailand. They help explain some aspects of the relationships between General English and English for Academic Purposes. It is hoped that the results may facilitate the work of language programme designers in improving language teaching and testing in Thailand.

References

Alderson, J.C. and Urquhart, A.H. 1985. The effect of students’ academic discipline on their performance on ESP reading tests. Language Testing, 2(2): 192–204.

Bachman, L.F. and Palmer, A.S. 1983. The construct validity of the FSI oral interview. In J.W. Oller, Jr. (Ed.), Issues in language testing research. Rowley, Mass.: Newbury House Publishers, Inc.

Julasai, D., Prapphal, K., Chanpothikul, C., Opanonamta, P., Aue-Apaikul, P., Nakwiroj, P., Wuthikaro, S., Rongsa-ard, W. and Kangwanpornsiri, W. 1988. Stages of language acquisition of Chulalongkorn University students. Chulalongkorn University Language Institute.

Moy, R.H. 1975. The effect of vocabulary clues, content familiarity and English proficiency on cloze scores. Unpublished Master’s Thesis, University of California, Los Angeles.

Oller, J.W., Jr. 1979. Language tests at school. Longman.

Peretz, A.S. 1986. Do content area passages affect student performance on reading comprehension tests? Paper presented at the twentieth meeting of the International Association of Teachers of English as a Foreign Language, Brighton, UK, April 1986.

Prapphal, K. 1987. The use of performance-based tests in language program evaluation. In A. Wangsothorn, K. Prapphal, A. Maurice, and B. Kenny (Eds.), Trends in language programme evaluation. Bangkok: Chulalongkorn University Language Institute.

Prapphal, K., Pas, K., and Tanapongpipat, S. 1984. Modified cloze tests as predictive measures of language acquirers and academic achievers. PASAA, 14: 75–89.

Thammarakkit, P. 1989. An investigation of general and ESP tests for first year students at Mahidol University. Unpublished Master’s Thesis, Mahidol University.

Notes

1. The data were taken from “Modified cloze tests as predictive measures of language acquirers and academic achievers” by K. Prapphal, K. Pas, and S. Tanapongpipat. PASAA 14: 75–89, 1984.

2. The data were taken from “Stages of language acquisition of Chulalongkorn University students” by Julasai, D. et al. Chulalongkorn University Language Institute, 1988.

3. The data were taken from “An investigation of general and ESP tests for first year students at Mahidol University” by Phennapha Thammarakkit. A master’s thesis at Mahidol University, 1989.